Last week Apple enabled Siri “learning” by default for all apps (native and third-party). I thought I’d wrap up with a reflection on Apple’s action and whether the concern was warranted.

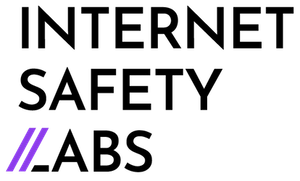

The initial reaction for most of us was, “Oh great. Yet another forced offering to the great AI gods. No thank you!” I and others had a strong knee-jerk reaction of “this is NOT ok.” Was the initial reaction warranted? Figure 1 shows my original LinkedIn posts, which I updated in real time as I continued to explore the situation.

Figure 1

When I took a closer look, Apple clearly tries to make Siri as “edge-y” as possible—i.e. executing independently on the device to the extent possible. But how safe is the architecture? What data exactly gets shared and with what parts of Apple’s infrastructure? This is the problem with all the large platforms: we just can’t observe server-to-server behaviors within the infrastructure.

Here’s what I do know. Apple’s action was surprisingly presumptuous and disrespectful to their users. It was markedly off-brand for the privacy-evangelizing company, and their actions (or inactions, as the case may be) since the time this flared up are telling.

After the dust has settled, I stand by my recommendation to disable Siri learning, and I’m less concerned about Siri suggestions. Here’s why.

- Apple did this in a sneaky way. Not at all on-brand for a privacy-touting company.

- It’s at least the second time Apple behaved in this way in recent days. On January 3, 2025, The Register reported that Apple auto-opted everyone into AI analysis of their photos https://www.theregister.com/2025/01/03/apple_enhanced_visual_search/

- You may have missed it, but on January 8, 2025 Apple issued a press release pretty much saying, “Siri’s super private. Hooray!” This is most likely when the auto-opt-in happened, though I have no supporting evidence. https://www.apple.com/newsroom/2025/01/our-longstanding-privacy-commitment-with-siri/ Coincidence? Hard to think so. Also, the language in the press release raises more questions than answers:

- “Siri Uses On-Device Processing Where Possible” Great. We’d like to know more about that.

- “…the audio of user requests is processed entirely on device using the Neural Engine, unless a user chooses to share it with Apple.” Like, if they toggle a setting for Siri Learning to “enabled”, for example? Like that kind of “choosing” to share it with Apple?

- “Apple Minimizes the Amount of Data Collected for Siri Requests” Ok, but it’s not zero.

- Goes on to say, “certain features require real-time input from Apple servers.” Careful wording here. I’d like more info on the data that gets sent to the Apple servers, and how it’s used.

- The press release segues right into this: “a random identifier – a long string of letters and numbers associated with a single device…is used to keep track of data while it’s being processed, rather than tying it to a user’s identity through their Apple Account or phone number”. I find that phrasing curious. Note that it says, “associated with a single device” and NOT “associated with a single device for a single query”. Is the identifier long-lived? Is the identifier in fact some kind of internal Apple universal identifier for you—a join key assembling an ever-growing biographical log of you [like virtually everyone else in martech and edtech]? Any kind of permanent, unique identifier is risky and don’t let anyone tell you otherwise. I really hope I’m wrong on this.

- “Private Cloud Compute” This sounds promising but we’re going to need some more info about this.

- “…many of the models that power Apple Intelligence run entirely on device.” But apparently some don’t. Again, going to need more details here.

- It was obnoxiously difficult to unwind this default opt-in. You couldn’t just go to Settings -> Apps; you also had to go to Settings -> Siri to get all the apps. Think about this. This is Apple, the company that (according to many) was the epitome of usable design. I think about Jony Ive and Steve Jobs: would they stand behind this kind of user interaction?

- Siri learning was enabled for Every. Single. App. Including native Passwords, Phone, Apple Home, and Health Data. I get that there are legitimate reasons why Siri learning should be connected for every app—e.g. people who must use voice as their primary mode of device interaction. I’m surprised, however, that they didn’t think it might be a tad risky to be auto-enabled for things like Passwords for everyone. It feels weirdly clumsy for a company not known for clumsiness. (Unless you’re building a training set for an LLM in which case it makes complete sense.)

- NB: I still can’t find a way to disable it from Calendar.

- Disabling Siri learning and suggestions has done some weird things to non-voice interactions on my phone which makes me wonder just how interwoven Siri is within all modes of user interaction, beyond just voice interaction. Example: voice note recorder stopped showing text on the record button and it no longer supports “resume [recording]”; it now just ends the recording and I need to start a new one if I want to extend the recording with a new idea. I thought Siri was just supposed to be about voice interaction? Apparently not. (Note: upon re-enabling Siri Learning and Siri suggestions, the text under the button and the resume function re-appeared. Huh.)

- Apple Magazine’s (November 25, 2024) piece on the forthcoming Siri revamp reinforces the fact that the boundaries of what is and isn’t “Siri” are hazy indeed (Figure 2). The story affirms that the Siri update touches multimodal interaction capabilities.

Figure 2: Source https://applemagazine.com/siri-engine-revamp-what-apples-next/

One starts to wonder: what isn’t Siri when it comes to user interface and interaction? And where does Siri begin and end in terms of software execution and data access? This year is already touted as the year for agentic AI, and what could be more agentic than AI-infused Siri?
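On the data-access question, the identifier worry from the press-release notes above can be made concrete with a minimal Python sketch. It is purely illustrative: the `simulate` function, the sample requests, and both identifier schemes are my own hypothetical constructions, not a description of how Apple’s systems actually work. It only shows why the lifetime of a “random identifier” matters.

```python
import uuid
from collections import defaultdict

# Hypothetical model of a server-side request log.
# Nothing here reflects Apple's actual implementation.


def simulate(requests, per_request_ids):
    """Group logged requests by the identifier attached to each one."""
    device_id = uuid.uuid4().hex  # long-lived: generated once per device
    logs = defaultdict(list)
    for text in requests:
        # Rotating scheme: a fresh random identifier for every request.
        # Long-lived scheme: the same device identifier every time.
        ident = uuid.uuid4().hex if per_request_ids else device_id
        logs[ident].append(text)
    return dict(logs)


requests = [
    "directions to the clinic",
    "call the pharmacy",
    "remind me to take medication",
]

long_lived = simulate(requests, per_request_ids=False)
rotating = simulate(requests, per_request_ids=True)

# Number of distinct identifiers the server would see under each scheme.
print("long-lived identifiers:", len(long_lived))
print("rotating identifiers:", len(rotating))
```

With the long-lived scheme, every request lands under a single key, which is exactly what makes it usable as a join key. With per-request identifiers there is nothing to join on. Which of these (if either) resembles Apple’s design is the open question.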

- For me, the biggest smoking gun is that late last year Apple announced that Siri will be powered by Apple’s LLM (https://superchargednews.com/2024/11/21/llm-siri-to-launch-by-spring-2026/). First off, “powering Siri” with Apple’s LLM already sets off some alarm bells. The timing of this forced opt-in to Siri Learning seems quite aligned with the development timeline of an LLM said to be launching in late 2025/2026. “The new Siri will be powered by Apple’s advanced Large Language Models (LLM), which will make the digital assistant more conversational and ChatGPT-like.” I can’t really imagine a world where Apple wouldn’t train their LLM off their current customer base.

- Siri Architecture: Because I love architecture and because I can remember when Siri was a baby 3rd party app, I wanted to go back and take a brief look at its evolving architecture. As of 2017, the Siri architecture was a poster child of typical app client-server architecture and the reliance on the server is clear (Figure 3), with even the trigger-word audio being sent to the server.

Figure 3: Source https://machinelearning.apple.com/research/hey-siri

2017 is, of course, ancient times in developer years and Apple has been relatively transparent about how they’ve been rearchitecting Siri to [at least] keep the trigger word detection on the device (https://machinelearning.apple.com/research/voice-trigger).

- The last thing I want to mention is that Apple has been preternaturally silent about this whole thing. I find that remarkably off-brand. Unless Siri and Apple AI are inextricably interwoven and this was actually a training set creation exercise, in which case, probably best to keep silent.

Is Apple training their LLM via the forced Siri Learning opt-in? Maybe. Will be good to hear from them on this. And while we’re at it, I’d love a new “revamped” Siri and Apple LLM architecture diagram/document, with greater transparency and detailed information on the functionality distribution and data sharing between the device and back-end servers and services. Please and thank you.