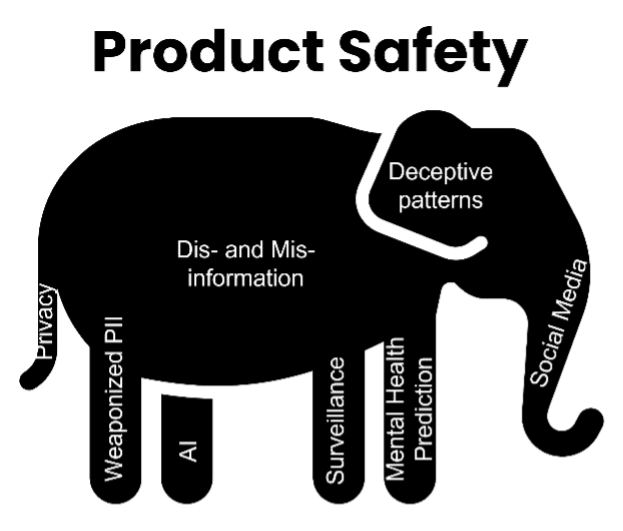

There’s a popular parable about five blind men touching various parts of an elephant to identify what they are unable to see. One, touching the tail, proclaims, “Oh, this is long and thin with straw on the end. It’s a broom.” One, touching one of the massive legs, announces, “Ah, this is undoubtedly a pillar.” Another, touching the trunk, says, “This is a hose.” It is, of course, none of those things, though those guesses aren’t totally wrong.

This is one of my favorite parables, as it highlights the difficulty of charting novel terrain and the value of holistic context. And I find it uniquely apt in recent days as the world grapples with the harmful aspects of software and software-driven technology (i.e., all technology).

Twenty-plus years ago we started off touching one piece of harmful software and proclaiming: “Ah! This is privacy!” Over the years, we’ve felt other parts of the elephant. Currently, we are all aflutter with our awakening around, “Ah! This is AI or automated decision-making.” And now from the US Surgeon General, “Ah! This is social media harms!” And like the blind men, it’s both true and not true. These proclamations aren’t necessarily wrong or useless so much as they are fragmented and incomplete. The beauty of the elephant parable is that it doesn’t deny the non-elephant parts, per se, and neither do I here. But it makes clear the importance of perspective and contextual frames. Which is my point. We can continue to view the parts in isolation, but what happens when we view them through a holistic lens of product safety? I suggest that product safety framing will help us move faster to realize safer software, keeping more people safer sooner.

Because product safety is the whole elephant.

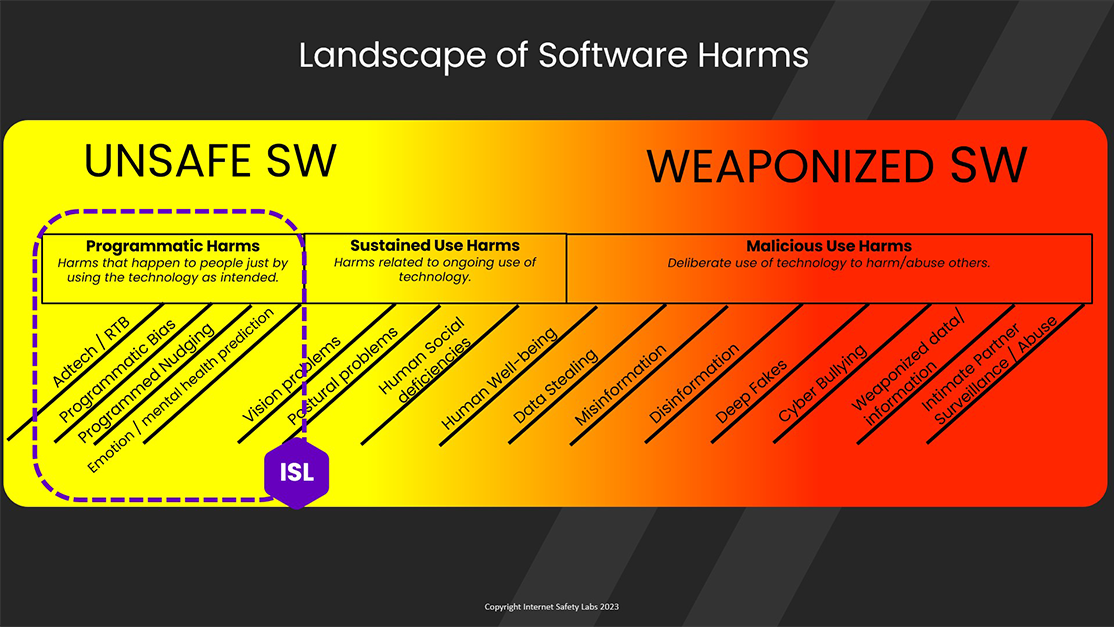

I’m not sure why product safety as a framing mechanism isn’t gaining more traction. Sure, the legal specter of product liability creates a powerful opposing friction (in which case product safety is the elephant that abides in the living room). But the framing of product safety is powerful and elegant, neatly tying together the range of harms that software has uniquely ushered into existence (Figure 1 conveys a sampling of the ways software-driven technology can be harmful).

We innately understand what product safety means, as well as what we expect of it. Since the Industrial Revolution, product safety has become nearly an inalienable human right. Objective measurement of product harms and agreement on what counts as reasonable product safety together form a crucial leveling force between producers and consumers.

Independent product safety testing is a force that counterbalances the inordinate power wielded by industry.

As I reflect on the last 25 years or so, I also note that things like “human-centered design” and “humane technology” have come into focus. Product safety is also part of the glue that intimately considers and necessarily involves humans in the product lifecycle in a way we haven’t adequately done in the past. When we say “product safety” we know we’re talking about human safety, so inseparable are the two notions. Thus, we never use a phrase like “human-centered product safety” because it’s redundant.

Figure 1

How Did We Get Here?

My mantra for the past many months has been: we just didn’t know. The very words “product safety” were never uttered in my earliest days of software development in the late 1980s and 1990s. This is particularly meaningful considering my foundational career years were spent at Motorola, one of the biggest and strongest adopters of manufacturing quality measurement. To Motorola’s credit, they were also one of the early adopters of measuring software quality. But those quality measurements (e.g., the Software Engineering Institute’s Capability Maturity Model) never included human safety.

We simply never put the words “product safety” and “software” together. They were utterly heterogeneous things, like “apples” and “plywood”. They didn’t go together in our minds or in our discourse—with one exception of course: software that can render hardware dangerous such as power and navigation systems. Outside of those, it just never occurred to us.

One day last year, after one of the diatribes I’m prone to when given an audience, no matter how small (in this case, our PR firm), Mike observed when I paused for breath:

“Just because ‘soft’ is in the name doesn’t mean it can’t hurt you.”

That is a near-perfect encapsulation of the gaping omission in our thinking as software engineers at the time.

What Next?

Fast forward to today and it’s clear that the animating force of software acting on the programmability of the human mind at staggering scale is an alchemy capable of great harm indeed. This isn’t to say that we developers were or are malicious in our undertakings. We just didn’t—and sadly, still don’t—know. Nor did we or do we adequately measure risk of harm from software. But that’s changing.

It’s wonderful that we’re waking up. The next step is to realize that the elephant isn’t “privacy” or “AI” or whatever.

The elephant is product safety. This framing offers a focusing function that allows us to move from principle to measurement; from ignorance to awareness when it comes to what’s happening under the hood of technology. And then we can move from awareness to demand for safer products. It’s not magic, it’s measurable.

And that’s the really good news: it is measurable. Yes, complicated. Yes, controversial and undoubtedly painful at the start. But if we’re clever enough to build this stuff, we’re certainly clever enough to objectively measure it, decide on thresholds for reasonable safety, and continuously monitor and publish the observable facts.