In our continued effort to educate people about the risks posed by data brokers, this blog post provides a list of authoritative news stories and research about a type of data broker that creates unique risks for people and communities: location data brokers.

Location data brokers have exploded in popularity over the last decade. There are currently hundreds of data brokers across the U.S. selling location data, and dozens of intermediaries offering “location data broker-broker services” that connect buyers and sellers, with scarcely any protections for, or notice to, the people whose data is being collected, shared, and sold.

Due to the widespread commercialization of location data, there have been multiple serious scandals involving unscrupulous brokers making sensitive location data available for sale. Read on for our tips on protecting your data and, for app builders, on protecting user data.

Location Data Broker Scandals in the News

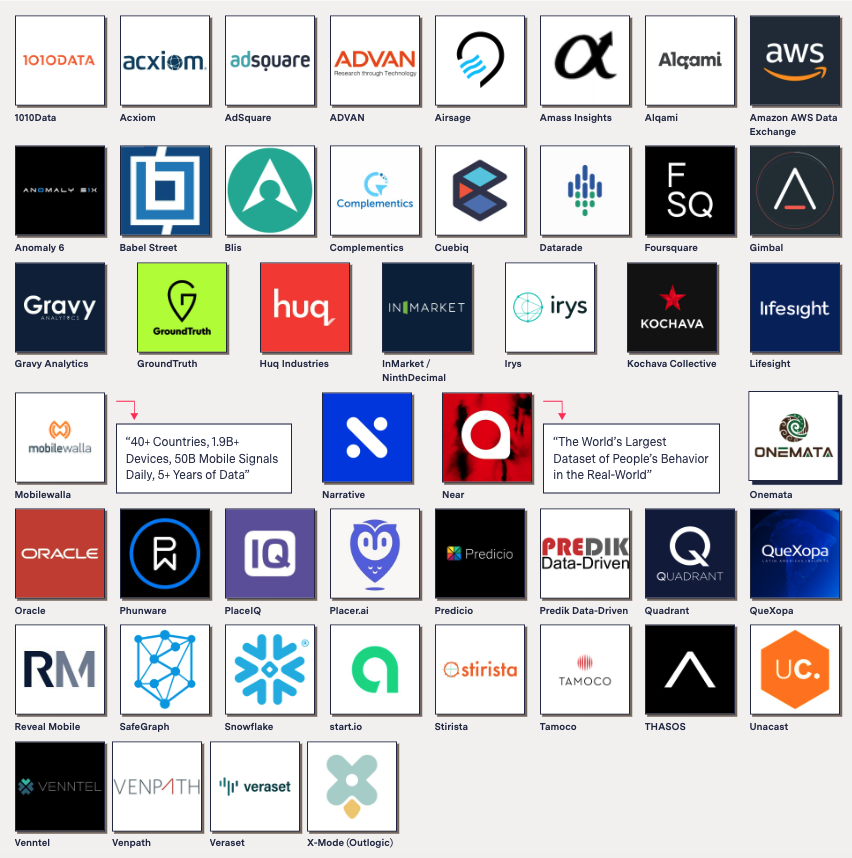

“There’s a Multibillion-Dollar Market for Your Phone’s Location Data” – The Markup published one of the definitive deep dives into location data brokers, compiling a list of several dozen niche data brokers with details on each of them. Many of the companies included in this September 2021 article have faced scandals both before and after the research was published. The Markup does a masterful job of explaining the magnitude of this dystopian reality.

Image from The Markup’s “There’s a Multibillion-Dollar Market for Your Phone’s Location Data” article.

“Twelve Million Phones, One Dataset, Zero Privacy” – The New York Times conducted a groundbreaking investigation by purchasing data from location brokers and tracking people and their patterns across the U.S., including people who visited the White House, the Pentagon, the NY Stock Exchange, and other sensitive locations.

“Top Catholic Priest Resigns after Phone Data Tracked to Grindr” – Data shared from the popular Grindr dating app was used to identify and dox a priest, with the NY Post aptly summing up the scandal: “Thanks to data streams, the Lord is no longer the only omniscient one.”

“Data Broker Is Selling Location Data of People Who Visit Abortion Clinics” – Vice Motherboard broke the news in May 2022 that location data broker SafeGraph was selling data about people who visit abortion clinics, data that could be used to identify and dox individual patients. The same week, Vice also reported that Placer.ai was selling similar location data about people who visit abortion facilities. Both companies stopped after the reporting.

“The Texas Abortion Ban Could Force Tech to Snitch on Users” – Protocol took on the topic of tracking users who access healthcare services, with some eye-opening details about what tech companies were doing to support employees. The article posed a complex question to readers: “… what about Uber and Lyft? Would they pay for drivers’ legal fees if they’re sued for giving women a ride? For now at least, tech companies aren’t answering any of these questions.” It went on to explain how many companies were trying to work out the impact of new laws while potentially putting customers at risk, given the complexity of technical tracking and the relative ease of obtaining location data from these services through legal requests.

“Collecting Your Location Data: Why It’s Worth So Much” – On this Wall Street Journal podcast, expert reporters dug into the details of X-Mode Social, a company selling location data to the U.S. government, including data from both Android and Apple phones, for intelligence purposes.

“Tim Hortons coffee app broke law by constantly recording users’ movements” – This Ars Technica piece came out the day we planned to publish this post. The popular Canadian coffee app was tracking and recording people’s locations “‘every few minutes of every day,’ even when the app wasn’t open, in violation of the country’s privacy laws.”

What You Can Do as a User of Mobile Apps

The harm of sharing any type of data typically comes from linking the data to you, a unique individual, and adding it to a vast, historical, living profile of information about you. This happens in many ways, most of which consumers neither understand nor can readily see. Location information, by definition, means “person X is/was at location Y.” It is by its nature perhaps the most sensitive of all types of personal information.

One way to keep location information safe from unintended third parties is to break the correlation, to “de-identify” the location data so that it’s no longer correlated to any individual person.

The most common way that location data is associated with a unique individual is through the “Advertising ID” on mobile phones. The good news is that there are ways to disable that identifier on both iOS and Android phones. The Electronic Frontier Foundation (EFF) created a helpful guide to disabling the Advertising ID on both Android and iOS phones: “How to Disable Ad ID Tracking on iOS and Android, and Why You Should Do It Now.”
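To make that correlation concrete, the Python sketch below groups location pings by advertising ID, a simplified version of how a broker dataset might be joined. The records, the field names (`ad_id`, `ts`, `lat`, `lon`), and the helper functions are hypothetical; the coordinates roughly match the White House, the Pentagon, and the NY Stock Exchange from the Times story above. Removing the ID stops this simple join, but it is not full de-identification on its own: a trail of precise points can still reveal where one person sleeps and works.

```python
from collections import defaultdict

# Hypothetical broker-style records: the same ad ID rides along on every
# ping that one phone's apps report. Values are invented for illustration.
PINGS = [
    {"ad_id": "a1b2", "ts": 1, "lat": 38.8977, "lon": -77.0365},
    {"ad_id": "c3d4", "ts": 1, "lat": 40.7069, "lon": -74.0113},
    {"ad_id": "a1b2", "ts": 2, "lat": 38.8719, "lon": -77.0563},
    {"ad_id": "a1b2", "ts": 3, "lat": 38.8977, "lon": -77.0365},
]


def trails_by_ad_id(pings):
    """Group pings into time-ordered trails keyed by advertising ID."""
    trails = defaultdict(list)
    for p in sorted(pings, key=lambda p: p["ts"]):
        key = p.get("ad_id")
        if key is None:
            # Without an identifier there is nothing to join on.
            continue
        trails[key].append((p["ts"], p["lat"], p["lon"]))
    return dict(trails)


def strip_ad_id(pings):
    """Return copies of the pings with the advertising ID removed."""
    return [{k: v for k, v in p.items() if k != "ad_id"} for p in pings]


linked = trails_by_ad_id(PINGS)
unlinked = trails_by_ad_id(strip_ad_id(PINGS))
print(len(linked["a1b2"]))  # 3 pings joined into one device's trail
print(unlinked)             # {} : no key left to join on
```

Disabling or resetting the Advertising ID on your phone plays the role of `strip_ad_id` here: new pings no longer carry the old key, so they cannot be appended to the old trail by this kind of join.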

Finally, since a large portion of the data sold through location data brokers is acquired through mobile apps, we always urge people to be selective about which apps they download, limit the permissions they grant those apps, and delete apps once they are done using them. It’s very difficult to avoid being tracked by location data brokers entirely, but small steps on your own devices can reduce some of your risk.

What You Can Do as a Maker of Mobile Apps

At ISL, we believe that the onus shouldn’t be on people to keep themselves safe online, and that real change happens more effectively at the app developer level. It’s even more impactful when change happens at the platform level. If you’re a maker of technology, this section is especially for you.

PLATFORM CHANGE: One thing Android could do immediately that would curb an entire sector of data brokers is to remove the ability of SDKs within apps to “see other apps installed.”

As an app maker, first and foremost, be sure to practice location information minimization:

- Do you really need location information? Just because you can collect it doesn’t mean you should. Our research shows that premature location detection is a significant turn-off for users.

- If you really do need location information, use the least precise level of location that will meet your genuine need to provide the expected service to the user. One report found that an app, Yik Yak, was shockingly using location accurate to within feet. Be honest about what level of data you really need.

- Do you just need a country?

- Do you just need a zip code?

- Precise location should be the exception, not the rule.

- Remember that whatever level of location information your app has access to, all of your integrated SDK partners have access to it as well. Are you monitoring their use of your users’ location? If not, you should be.
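One simple way to apply the least-precise-location principle is to coarsen coordinates before they are stored or handed to any SDK. The Python sketch below rounds latitude and longitude to a chosen number of decimal places. The `Precision` tiers and the `coarsen` helper are hypothetical, and rounding is only one option; snapping to a grid cell or reverse-geocoding to a zip code or country are others. As a rough guide, one degree of latitude spans about 111 km, so two decimal places is on the order of 1 km and four is on the order of 10 m.

```python
from enum import IntEnum
from typing import Tuple


class Precision(IntEnum):
    """Hypothetical precision tiers, expressed as decimal places kept."""
    REGION = 0        # about 111 km of latitude per step
    CITY = 1          # about 11 km
    NEIGHBORHOOD = 2  # about 1.1 km
    STREET = 3        # about 110 m


def coarsen(lat: float, lon: float, level: Precision) -> Tuple[float, float]:
    """Round a coordinate pair so it reveals no more than `level` allows.

    Latitude steps are roughly constant in size; longitude steps shrink
    toward the poles, so cells get narrower east-west away from the equator.
    """
    return round(lat, int(level)), round(lon, int(level))


# A precise fix (six decimals, roughly 11 cm) reduced to neighborhood level
# before it leaves the device or reaches an integrated SDK.
print(coarsen(38.897676, -77.036530, Precision.NEIGHBORHOOD))
```

Coarsening early matters because, as noted above, integrated SDKs receive whatever precision the app itself holds.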

Next, be sure to practice Identification Minimization (Safe Attribute #3). In the Yik Yak story above, the service purported to be anonymous, but in addition to tracking location down to the foot, it was also uniquely identifying users. See our Attributes of Safe and Respectful Commitments for more information on appropriate and proportional identification.
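As one illustration of identification minimization, a service that promises anonymity can give each session a fresh random token instead of a stable device or account identifier, so posts from different sessions are not trivially linkable. The Python sketch below is a minimal example under that assumption, not a complete design: `Session` and `new_session_token` are hypothetical names, and the token comes from the standard-library `secrets` module.

```python
import secrets
from dataclasses import dataclass, field


def new_session_token() -> str:
    """Return a random, unguessable token with no link to the device."""
    # 16 random bytes -> 32 hex characters; nothing device-derived is mixed in.
    return secrets.token_hex(16)


@dataclass
class Session:
    """Hypothetical per-session context for an 'anonymous' posting feature."""
    token: str = field(default_factory=new_session_token)

    def tag_post(self, text: str) -> dict:
        # Posts carry only the session token, never an ad ID or account ID.
        return {"author": self.token, "text": text}


first, second = Session(), Session()
print(first.tag_post("hello")["author"] != second.tag_post("hi")["author"])
```

Unlinkable tokens are not enough if each post also carries a precise location, since the locations themselves can re-link the sessions; this complements location minimization rather than replacing it.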

Other things you should do:

- Conduct a privacy assessment to ensure that users can’t identify the location of other users through simple features.

- If your service provides “local content,” always conduct extra tests to ensure that someone’s exact location can’t be determined by rapidly changing one’s own location or by sending other kinds of spoofed location data. If users can see local content, assume that an adversary will try to use it to track someone without their permission and dox the location of the person behind the content.
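A concrete version of that test: if a feature reports how far away another user or post is, an adversary who spoofs three known positions can solve for the target’s location (trilateration). The Python sketch below assumes flat x/y coordinates in meters, a simplification that ignores Earth’s curvature, and all names and positions are hypothetical. It recovers the target exactly from precise distances, which is the failure a privacy test should catch, then repeats the attack against distances rounded up to 500 m buckets.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]


def distance(a: Point, b: Point) -> float:
    """Straight-line distance in a flat local frame, in meters."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def trilaterate(p1: Point, d1: float, p2: Point, d2: float,
                p3: Point, d3: float) -> Point:
    """Find the point at distances d1, d2, d3 from p1, p2, p3.

    Subtracting the circle equation around p1 from those around p2 and
    p3 leaves two linear equations in x and y, solved by Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    a1, b1 = 2 * (x2 - x1), 2 * (y2 - y1)
    c1 = d1**2 - d2**2 - x1**2 + x2**2 - y1**2 + y2**2
    a2, b2 = 2 * (x3 - x1), 2 * (y3 - y1)
    c2 = d1**2 - d3**2 - x1**2 + x3**2 - y1**2 + y3**2
    det = a1 * b2 - a2 * b1
    if det == 0:
        raise ValueError("spoofed positions must not be collinear")
    return (c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det


def reported_distance(true_m: float, bucket_m: Optional[float]) -> float:
    """Distance as the feature displays it: exact, or rounded up to a bucket."""
    if bucket_m is None:
        return true_m
    return math.ceil(true_m / bucket_m) * bucket_m


# Hypothetical target and three positions an adversary spoofs, in meters.
target = (312.0, 145.0)
spoofs = [(0.0, 0.0), (1000.0, 0.0), (0.0, 1000.0)]

for bucket in (None, 500.0):
    ds = [reported_distance(distance(s, target), bucket) for s in spoofs]
    guess = trilaterate(spoofs[0], ds[0], spoofs[1], ds[1], spoofs[2], ds[2])
    print(f"bucket={bucket}: estimate is {distance(guess, target):.1f} m off")
```

In this setup the exact distances put the estimate essentially on the target, while 500 m buckets still leave it only about 188 m away. Bucketing helps but does not hide the user, and an adversary with more spoofed positions could narrow the estimate further, so a test like this is worth running with your real bucket sizes and query limits.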